Survey Innovations

Developments at various levels of social science research are driving demand for increasingly complex, up-to-date computer-based survey methods and the measurement instruments that go with them. To give this need for innovation a structural and organizational home, we have established the “Survey Innovations” department.

In contrast to survey methodology, the department's remit is broader and more overarching. The focus is not on investigating sources of error or on avoiding, minimizing, or correcting them, but on designing new procedures, or new combinations of existing procedures, for measuring social science phenomena. It goes without saying that such new procedures and combinations must then be evaluated in terms of survey methodology. In doing so, we follow the principle Schumpeter formulated for innovation: “the doing of new things or the doing of things that are already done, in a new way”.

Hardly any study conducted by infas can do without computer-based survey instruments, although these are increasingly used as part of a mixed-mode design. Client requirements in this area are rising constantly. It is troubling that even in academic research, online samples are often used and then uncritically declared “representative”.

Even if “non-probability samples” are justified for certain research questions, there is no way around a random sample when the aim is an accurate picture of the opinions or attitudes of the population, which is the case for most studies conducted by infas.

Closely tied to computer-based survey instruments is the question of the samples on which the respective survey method is based. How to merge different samples into a “triple frame”, by analogy with the dual-frame approach in telephone sampling, is still unresolved. Given the increasing prevalence of “non-probability samples”, this question needs a scientifically sound answer.

In addition, new technological developments are changing the understanding of measurement. Much can now be measured and processed automatically, without any action by respondents (passive measurement). This creates a new relationship between measurements recorded by devices on the one hand and measurements obtained through surveys on the other, and it poses new challenges for the quality criteria of measurement (reliability and validity, construct validity in particular).

The “Survey Innovations” department addresses these and numerous other questions. They arise from the further development of empirical instruments, from new social science research questions, and from societal changes that sometimes hinder and sometimes ease access to objectifiable data.

One of the department's topic areas is (social) science apps. infas itself operates the panel app “my infas”, which was developed for tracking and panel maintenance in panel studies. However, we still know very little about who uses such apps, how they can be used most efficiently, and what content should be presented through them and at what intervals. It will therefore be necessary to test different concepts and approaches with different populations and to build an empirical basis for further development.

This also raises the question of which other apps can be used, for example for passive measurement, what data can be collected with them, and how valid those data are. Participants' acceptance of such apps has also not yet been sufficiently examined and could lead to selectivity in the measurement. A successful example of a smartphone app in empirical research is the mobico app used in infas mobility research.

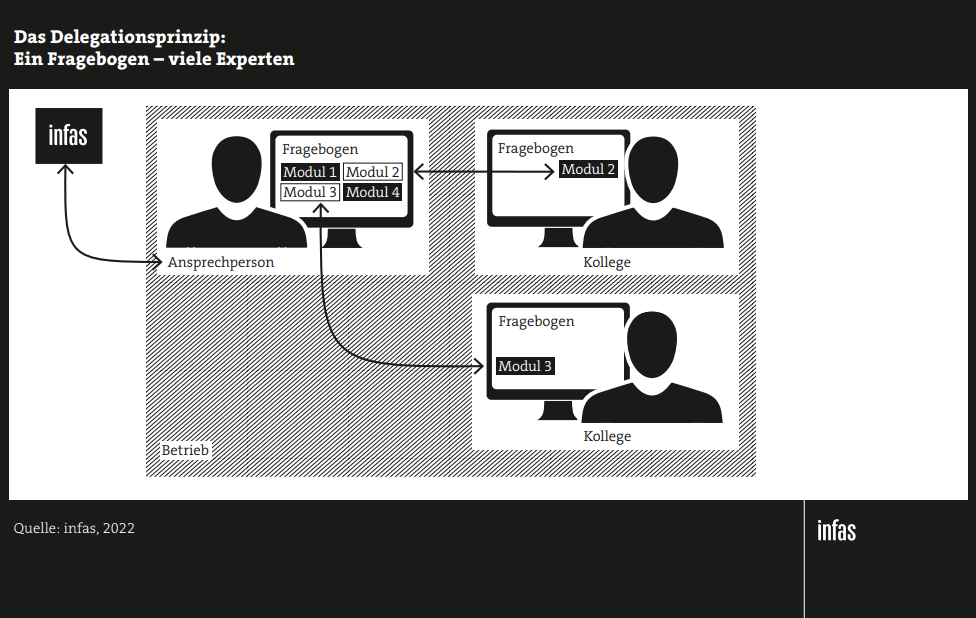

Household surveys, as well as business surveys in which responses from different people need to be merged, quickly become complex. First, the household composition must be recorded; from it, the instrument derives how many persons, and if necessary which ones, are to be interviewed. How can several persons access one questionnaire, or how are different questionnaires created adaptively for each person? How is access (link and password) to the questionnaire distributed to the different people?

Similar questions arise in company surveys. There, it is more common that information has to be obtained from several people, because no single contact person is familiar with all aspects. Using a delegation principle, the anchor person in the company can delegate individual questionnaire sections independently to other people in the company. The questionnaire is then no longer linear, which poses new challenges for filtering and for presenting filtered questions. At the same time, a non-linear questionnaire opens up new possibilities for dividing and presenting the individual chapters of a questionnaire.

As a rule, computer-based survey instruments are programmed linearly, i.e. the instrument fixes the order of the individual topic blocks and/or questions. This linearity does not hold, for example, when the delegation principle is used in company surveys. The questionnaire must then be workable in any order, at the level of both topic blocks and individual questions, because a respondent must first get an overview of the topics and questions before deciding whether he or she has all the information at hand or must delegate individual topics to colleagues. This does not preclude working through the questionnaire linearly, for example in an interviewer-assisted survey.
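The delegation principle described above can be illustrated with a small data structure. The following is only a minimal sketch under stated assumptions, not the instrument infas uses: the names `Section`, `Questionnaire`, `delegate`, `answer`, and `overview` are hypothetical, sections may be answered in any order, and only the anchor person may pass a section on to a colleague.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """One topic block of the questionnaire (hypothetical structure)."""
    title: str
    assignee: str
    answers: dict = field(default_factory=dict)
    done: bool = False


class Questionnaire:
    """Non-linear questionnaire: sections can be answered in any order."""

    def __init__(self, anchor, titles):
        self.anchor = anchor
        # Initially the anchor person is responsible for every section.
        self.sections = {title: Section(title, anchor) for title in titles}

    def overview(self):
        # Lets a respondent inspect all topic blocks before answering any.
        return [(s.title, s.assignee, s.done) for s in self.sections.values()]

    def delegate(self, by, title, to):
        # Assumption of this sketch: only the anchor person may delegate.
        if by != self.anchor:
            raise PermissionError("only the anchor person may delegate")
        self.sections[title].assignee = to

    def answer(self, person, title, answers):
        section = self.sections[title]
        if person != section.assignee:
            raise PermissionError(f"{person} is not assigned to {title!r}")
        section.answers.update(answers)
        section.done = True

    def is_complete(self):
        return all(s.done for s in self.sections.values())


# Illustrative usage with made-up section titles and persons.
survey = Questionnaire("anchor", ["staff", "finance", "training"])
survey.delegate("anchor", "finance", "controller")
survey.answer("controller", "finance", {"revenue": 1})  # answered first
survey.answer("anchor", "staff", {"employees": 5})
print(survey.overview())
print(survey.is_complete())
```

Because completion is tracked per section rather than by position, the same structure could also be worked through from top to bottom in an interviewer-assisted setting. Filtering across sections, which the text names as an open challenge, is deliberately left out of this sketch.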

Programming a non-linear questionnaire also makes it easier to adapt a paper questionnaire. On paper, the questions are arranged in a certain order, but the order in which they are actually answered cannot be enforced. The respondent can also get an overview of the topic blocks and the individual questions before starting to fill out the questionnaire. A non-linear, computer-based survey instrument can offer the same.

In CAWI surveys in particular, more and more content is to be collected that was previously collected only in classic interviewer-administered interviews, often because of the complexity of the instruments involved.

A good example is the collection of episodic data in life course research. The first question is how to design the instruments so that they can be self-administered on all possible devices. In terms of content, two competing and long-established approaches come into play again: should the various episodes be collected chronologically or thematically? Or should self-administered instruments offer both approaches and leave the choice to the respondent? The instrument would then have to support both. Another challenge is the graphical visualization of the collected data, most of which must be checked for gaps and overlaps. Especially with CAWI as a multi-device survey, this places particular demands on the responsiveness of the survey instrument.
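The check for gaps and overlaps mentioned above can be sketched in a few lines. This is an illustrative example under assumptions, not the procedure used in any infas instrument: the `Episode` type and the function `find_gaps_and_overlaps` are hypothetical, and episodes are modeled as half-open intervals of months counted from an arbitrary origin (`start` inclusive, `end` exclusive).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    """One reported episode; months counted from an arbitrary origin."""
    label: str
    start: int  # first month of the episode (inclusive)
    end: int    # first month after the episode (exclusive)


def find_gaps_and_overlaps(episodes):
    """Return (gaps, overlaps) as lists of (start, end) month intervals."""
    ordered = sorted(episodes, key=lambda e: (e.start, e.end))
    gaps, overlaps = [], []
    covered_until = None  # latest month covered by any earlier episode
    for ep in ordered:
        if covered_until is not None:
            if ep.start > covered_until:
                # No episode covers the months in between.
                gaps.append((covered_until, ep.start))
            elif ep.start < covered_until:
                # The episode starts before earlier coverage has ended.
                overlaps.append((ep.start, min(ep.end, covered_until)))
        covered_until = ep.end if covered_until is None else max(covered_until, ep.end)
    return gaps, overlaps


# Made-up example history: a two-month overlap and a six-month gap.
history = [
    Episode("school", 0, 120),
    Episode("vocational training", 118, 150),
    Episode("employment", 156, 200),
]
gaps, overlaps = find_gaps_and_overlaps(history)
print("gaps:", gaps)
print("overlaps:", overlaps)
```

In life course data, an overlap is not necessarily an error, since episodes can genuinely run in parallel. A sketch like this would therefore only flag intervals to show the respondent for confirmation, for instance in the graphical overview the text describes.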

Online surveys are increasingly used to collect content that previously required interviewer administration because of the complexity of the instruments. With such a change of mode, a simple online questionnaire is not enough; the respondent needs the kind of support that an interviewer's presence provides. Computer-assisted self-interviews (CASI) are one possible way to achieve this, and they are likewise gaining importance in CAPI surveys. Digital survey instruments that not only ask questions but also support the respondent are one of the topics in the Survey Innovations area of expertise.

Online surveys have become an indispensable part of social science research. Although long established, they remain an ongoing challenge for survey instruments, because they are no longer limited to desktop PCs as they were in the 2000s. Web content is now accessed on a wide variety of device types, which is why we must also speak of mixed-device surveys. infas is continuously expanding its expertise in accounting for, and making the best use of, different end devices when developing and implementing suitable survey instruments.

Digitalization and recent technological developments are changing the understanding of measurement. Much can now be measured and processed automatically, without any action by respondents (passive measurement). This creates a new relationship between measurements recorded by devices on the one hand and measurements obtained through surveys on the other, and it poses new challenges for the quality criteria of measurement (reliability and validity, construct validity in particular).

It is still a scenario for the future, but in the long term it will be possible to conduct interviews without (human) interviewers. Voice assistants and the artificial intelligence behind them, for example, are advancing ever faster. Can such systems also be used for the interaction that constitutes an interview? Would potential respondents accept such approaches at all? For self-administered survey methods, too, it would be conceivable to offer assistance during the survey in the form of avatars. How would such avatars have to be designed? What tasks could they take on? Here, enormous technical challenges are followed by a series of methodological questions that need to be investigated systematically.

Across all the issues the Survey Innovations department addresses, another increasingly prominent one must be considered: accessibility. Many solutions, especially for online surveys, take this aspect into account inadequately or not at all. This is mainly because there are no uniform technical standards for implementation, and the requirements for content design are also only vaguely defined in many respects. At present, accessibility also still means doing without certain features in technical terms: many technical solutions that improve usability for non-impaired people are not barrier-free in themselves. The main question is how to solve this technically so that every instrument is barrier-free without significantly increasing the cost of instrument development or worsening usability.

Another challenge in CAWI surveys is recruiting or identifying additional respondents through snowball sampling. Approaches based on respondent-driven sampling (RDS) are also currently being discussed, for example for the analysis of social networks.

A user-friendly solution places high demands on usability above all, and data protection requirements must be taken into account as well. Further development must also focus on whether respondents accept such procedures. Beyond the enormous technical and data protection challenges, a number of methodological questions need to be investigated systematically.
